Hot, buggy and far too slow

SemiAccurate :: SemiAccurate gets some GTX480 scores

February 20, 2010

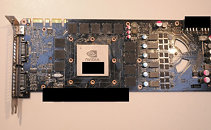

NVIDIA HAS BEEN hinting about the performance of its upcoming GTX480 cards, and several of our moles got a lot of hands-on time with a few cards recently. If you are waiting for killer results from the 'puppy', prepare for severe disappointment.

The short story about the woefully delayed GTX480, and its little sibling the GTX470, is that it is far slower than Nvidia has been hinting at, and there is a lot of work yet to be done before it is salable. Our sources have a bit of conflicting data, but the vast majority of the numbers line up between them.

Small numbers of final cards have started to trickle in to Nvidia, and it is only showing them to people it considers very friendly for reasons that we will make clear in a bit. Because of the small circle of people who have access to the data we are going to blur a few data points to protect our sources. That said, on with the show.

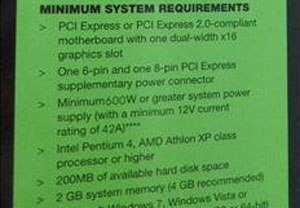

There are two cards, the GTX480 having the full complement of 512 shaders, and the GTX470 with only 448, which is 64 fewer for the math impaired. The clocks for the 480 are either 600MHz or 625MHz for the low or half clock, and double that, 1200MHz or 1250MHz, for the high or hot clock. Nvidia was aiming for 750/1500MHz last spring, so this is a huge miss. This speed is the first point the sources conflict on, and it could go either way, since both sources were adamant about theirs being the correct final clock. *sigh*.
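For the math impaired, the size of that miss can be worked out from the figures above. This is only a back-of-the-envelope sketch in Python: the clock and shader numbers are the ones quoted in the text, while the function and constant names are made up for illustration.

```python
# Clock and shader figures as quoted in the article.
TARGET_HALF_CLOCK_MHZ = 750            # Nvidia's reported target from last spring
REPORTED_HALF_CLOCKS_MHZ = (600, 625)  # the two conflicting source figures
GTX480_SHADERS = 512
GTX470_SHADERS = 448


def hot_clock(half_clock_mhz):
    """The hot (shader) clock is double the half clock."""
    return 2 * half_clock_mhz


def shortfall(actual, target):
    """Fraction by which `actual` falls below `target`."""
    return (target - actual) / target


if __name__ == "__main__":
    for half in REPORTED_HALF_CLOCKS_MHZ:
        miss = shortfall(half, TARGET_HALF_CLOCK_MHZ)
        print(f"{half}/{hot_clock(half)}MHz is {miss:.1%} below target")
    share = GTX470_SHADERS / GTX480_SHADERS
    print(f"GTX470 keeps {share:.1%} of the GTX480's shaders")
```

Whichever source is right, the card lands roughly 17 to 20 percent under the clocks Nvidia was aiming for.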

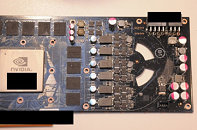

On the GTX470 side, there are 448 shaders, and the clocks are set at 625MHz and 1250MHz in both cases. If the GTX480 is really at 600MHz and 1200MHz, and the GTX470 is slightly faster, it should really make you wonder about the thermals of the chip. Remember when we said that the GF100 GTX480 chip was having problems with transistors at minimal voltages? Basically Nvidia has to crank the voltages beyond what it wanted to keep borderline transistors from flaking out. The problem is that this creates heat, and a lot of it. Both of our sources said that their cards were smoking hot. One said they measured it at 70C at idle on the 2D clock.

The fans were reported to be running at 70 percent of maximum when idling, a number that is far, far too high for normal use. Let's hope that this is just a BIOS tweaking issue, and the fans don't need to be run that fast. If they do, it would mean GF100 basically can't downvolt at all on idle. On the upside, if it's any comfort, the noise from the fans at that speed was said to be noticeable, but not annoying.

If you are wondering why Nvidia made such a big deal about GF100 GTX480 certified cases, well, now you know. Remember, higher temperatures mean more leakage, which means more heat, and then the magic smoke that makes transistors work gets let out in a thermal runaway. You simply have to keep this beast cool all the time.
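The feedback loop described above, where heat raises leakage and leakage raises heat, can be sketched with a toy model. Every number in this Python sketch is invented for illustration and none of them are measurements of GF100. The exponential leakage curve and the single thermal resistance figure are simplifying assumptions, and `settle_temperature` is a hypothetical helper name.

```python
import math


def settle_temperature(thermal_resistance,
                       dynamic_power=150.0,  # watts, hypothetical
                       leak_ref=30.0,        # leakage in watts at ref_temp, hypothetical
                       leak_coeff=0.02,      # leakage growth per degree C, hypothetical
                       ambient=25.0,         # degrees C
                       ref_temp=25.0,        # degrees C
                       max_temp=150.0,       # treat anything above this as runaway
                       steps=1000):
    """Iterate the heat/leakage loop until it settles.

    Returns the settled die temperature in degrees C, or None if the
    temperature climbs past max_temp (thermal runaway).
    thermal_resistance is in degrees C per watt; lower means better cooling.
    """
    temp = ambient
    for _ in range(steps):
        # Hotter silicon leaks more: assumed exponential in temperature.
        leakage = leak_ref * math.exp(leak_coeff * (temp - ref_temp))
        # More power means a hotter die for a given cooler.
        new_temp = ambient + thermal_resistance * (dynamic_power + leakage)
        if new_temp > max_temp:
            return None
        if abs(new_temp - temp) < 1e-6:
            return new_temp
        temp = new_temp
    return temp


if __name__ == "__main__":
    for r_th in (0.2, 0.5):
        result = settle_temperature(r_th)
        label = "runaway" if result is None else f"settles near {result:.0f}C"
        print(f"thermal resistance {r_th} C/W: {label}")
```

With the better cooler (0.2 C/W in this made-up setup) the loop settles at a stable temperature. With the worse one (0.5 C/W) each pass adds more leakage than the cooler can remove, and the temperature never stops climbing. That is the qualitative reason a chip like this would need a well ventilated case.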

While this backs up many of the theories on how Nvidia lost so much clock speed, it isn't conclusive. The take home message is that this chip has some very serious thermal problems, and Nvidia is in a box when it comes to what it can do to mitigate the problem.

Now that you know the raw clocks, how does it perform? It is a mixed bag, but basically the cards are well below Nvidia's original expectations, publicly stated as 60 percent faster than Cypress. The numbers that SemiAccurate was told span a variety of current games, all running at very high resolutions. Here is where we can't list specifics or the Nvidia Keystone Kops might find their first SemiAccurate mole. We will bring you the full spreadsheets when the cards are more widespread.

The GTX480 with 512 shaders running at full speed, 600MHz or 625MHz depending on which source, ran on average 5 percent faster than a Cypress HD5870, plus or minus a little bit. The sources were not allowed to test the GTX470, which is likely an admission that it will be slower than the Cypress HD5870.

There is one bright spot, and it is a very bright spot indeed. No, not the thermal cap of the chip, but the tessellation performance in Heaven. On that synthetic benchmark, the numbers were more than twice as fast as the Cypress HD5870, and will likely beat a dual chip Hemlock HD5970. The sources said that this lead was most definitely not reflected in any game or test they ran, it was only in tessellation limited situations where the shaders don't need to be used for 'real work'.

Update: The games tested DID include DX11 games, and those are still in the 5% range. Heaven uses tessellation in a way that games cannot: it can devote far more shaders to tessellation than a normal game can, because games have to use them for, well, the game itself. The performance of Heaven on GTX480 was not reflected in any games tested by our sources, DX9, 10, 10.1 or 11.

The GF100 GTX480 was not meant to be a GPU, it was a GPGPU chip pulled into service for graphics when the other plans at Nvidia failed. It is far too heavy on double-precision floating-point (DP FP) math to be a good graphics chip, but roping shaders into doing tessellation is the one place where there is synergy. This is the only place where the GTX480 stood out from an HD5870. The benchmarks that Nvidia showed off at CES were hand-picked for good reason. They were the only ones that Nvidia could show a win on, something it really needs to capture sales for this card and its derivatives, if any.

There was one problem that the sources pointed to, on Heaven, which was that the benchmark had many visible and quite noticeable glitches. If you were wondering why Nvidia only showed very specific clips of it at CES, that is why. DX11 isn't quite all there yet for the GTX480. This is probably why we have been hearing rumors of the card not having DX11 drivers on launch, but we can't see Nvidia launching the chip without them.

Getting back to the selective showings of the GTX480, there is a good reason for it. The performance is too close to the HD5870, so Nvidia will be forced to sell it at HD5870 prices, basically $400. The GPU isn't a money maker at this price point, and at best, Nvidia can price it between the $400 HD5870 and the $600 HD5970. The only tools left to deal with this issue are PR and marketing as the chip is currently in production.
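To put a rough number on that pricing squeeze, here is a small Python sketch. The two prices and the 5 percent average lead come from the figures reported here. Framing the question as performance per dollar is an assumption made for illustration, not Nvidia's pricing model, and `parity_price` is a hypothetical helper.

```python
HD5870_PRICE_USD = 400.0     # from the article
HD5970_PRICE_USD = 600.0     # from the article
GTX480_RELATIVE_PERF = 1.05  # about 5 percent faster than HD5870, per the sources


def parity_price(reference_price, relative_perf):
    """Price at which a card matches the reference card's performance per dollar."""
    return reference_price * relative_perf


if __name__ == "__main__":
    price = parity_price(HD5870_PRICE_USD, GTX480_RELATIVE_PERF)
    print(f"Parity with HD5870 on performance per dollar: about ${price:.0f}")
```

Under that assumption, a 5 percent lead buys only about $20 of headroom over the HD5870, nowhere near the HD5970's $600.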

If potential buyers get a wide range of benchmarks and correct specs, the conclusion will likely be that the GTX480 equals the HD5870 in performance. There will be no reviews based upon cards purchased in the wild for months. The way Nvidia has dealt with this in the past has been to control who gets access to cards and to hand pick the ones sent out.

If you give the GTX480 to honest journalists, they will likely say that the two cards, the GTX480 and the HD5870, show equivalent performance, so we hear Nvidia is doing its best to keep the GTX480 out of the hands of the honest. This means that only journalists who are known to follow the "reviewer's guide" closely, are willing to downplay the negatives, and will hit the important bullet points provided by Nvidia PR will be the ones most likely to gain early access to these cards. If this sounds unethical to you, it is, and it's not the first time. This is exactly what Nvidia did to cut Anand, Kyle and several others out of the GTS250 at launch. That worked out so well the last time that Nvidia will probably try it again. Expect fireworks when some people realize that they have been cut out for telling the truth.

The end result is that the GTX480 is simply not fast enough to deliver a resounding win in anything but the most contrived benchmark scenarios. It is well within range of a mildly upclocked HD5870, which is something that ATI can do pretty much on a whim. The GTX480 can barely beat the second fastest ATI card, and it doesn't have a chance at the top.

GTX480 is too hot, too big, too slow, and lacks anything that would recommend it over an HD5870, much less the vastly faster HD5970. Nvidia is said to be producing only 5,000 to 8,000 of these cards, and it will lose money on each one. The architecture is wrong, and that is unfixable. The physical design is broken, and that is not fixable in any time frame that matters. When you don't have anything to show, spin. Nvidia is spinning faster than it ever has before.
