Windows 7 AMD's Evergreen DX11 GPUs Set to Launch September 10: What to Expect at QuakeCon

If it were my choice to be rich, then so be it. Other than that, I would like to own cards from both makers, NVIDIA and ATI: 2 x ATI in CrossFire and 2 x NVIDIA in SLI. It would also be nice to own the newest AMD and Intel Core processors. I'm not picking sides; from my POV I'm just trying to get the best bang for the buck. Then again, I'm not rich!

Why not have both of them in the world?

Back to DX11.

cybercore

New Member

- Joined

- Jul 7, 2009

- Messages

- 15,641

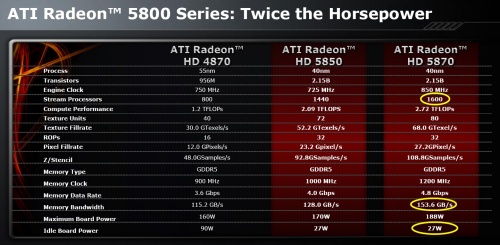

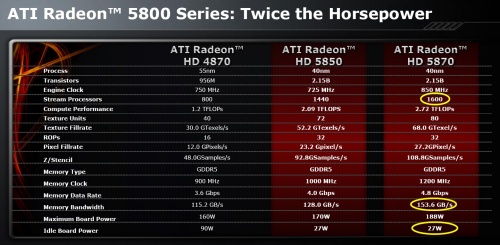

I like this one too:

ATI Radeon HD 5870 Review - TechSpot

Making the memory bus 2x, 3x, or 4x wider improves overall speed more effectively than just raising the GPU/memory clocks. I think ATI's architecture is not ready for wider buses, and that's where they lost. So did all of us, because weaker competition won't force NVIDIA to charge less.
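To put rough numbers on the bus-width argument, here is a minimal Python sketch of the usual peak-bandwidth formula (bus width in bytes times effective transfer rate). The function name and the 256-bit / 4.8 GT/s figures are illustrative assumptions, not values taken from the review, and peak bandwidth is only one factor in real-world performance.

```python
def memory_bandwidth_gb_s(bus_width_bits: int, effective_rate_gt_s: float) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    bus_width_bits: width of the memory interface in bits.
    effective_rate_gt_s: effective transfers per second per pin, in GT/s.
    """
    return bus_width_bits / 8 * effective_rate_gt_s


# Illustrative baseline (assumed figures): 256-bit bus at 4.8 GT/s.
baseline = memory_bandwidth_gb_s(256, 4.8)

# Doubling the bus width doubles the peak bandwidth...
wider_bus = memory_bandwidth_gb_s(512, 4.8)

# ...while a 10% memory overclock only adds 10%.
overclocked = memory_bandwidth_gb_s(256, 4.8 * 1.10)

print(f"baseline:    {baseline:.1f} GB/s")
print(f"2x bus:      {wider_bus:.1f} GB/s")
print(f"+10% clock:  {overclocked:.1f} GB/s")
```

Bandwidth scales linearly with both terms, so matching a 2x wider bus by clocking alone would need twice the memory clock. The sketch says nothing about die area, board cost, or whether a given game is actually bandwidth-bound.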

cybercore

New Member

- Joined

- Jul 7, 2009

- Messages

- 15,641

Looks not bad at all:

Benchmarks

Overclocking Performance

Power Consumption & Temperatures

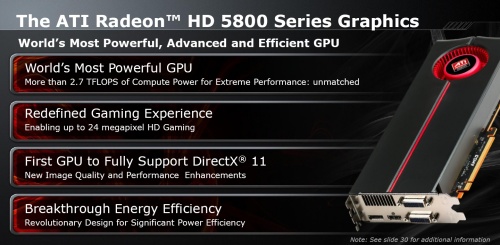

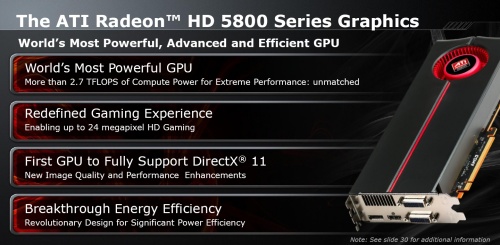

Overall, the Radeon HD 5870 has proven to be a real winner, and possibly one of the best graphics cards ever reviewed at this price point. It is the fastest single-GPU graphics card you can purchase today, the power consumption levels are excellent, and hopefully with better software support overclocking will improve as well.

cybercore

New Member

- Joined

- Jul 7, 2009

- Messages

- 15,641

This is weird... I answered your above question about 10mins ago....where's the post gone?

It's on the first page of this thread.

Oh well, it must be me. Too early yet lol.

In answer to your question... It's the DX11 stuff I guess..

I definitely like DX11 as well as the low temperatures and the high performance.

kemical

Essential Member

- Joined

- Aug 28, 2007

- Messages

- 36,176

- Thread Author

- #110

No I posted almost exactly the same message as the last one but it didn't appear.....weird..

Oh well, it's no biggie.. I did go on to say that if they reached the price of around £200 by xmas/jan then I might splash the cash..

kemical

Essential Member

- Joined

- Aug 28, 2007

- Messages

- 36,176

- Thread Author

- #111

Radeon HD 5870 X2 runs through CryEngine 3 like butter

Written by Jon Worrel

Monday, 28 September 2009 04:15

Efficient use of multithreading with DirectX 11

During AMD’s DirectX 11 training sessions last week, the company brought out its upcoming flagship HD 5800 series card under the Hemlock codename, the card which will also be the top model in its entire 40nm Evergreen series.

This multi-GPU beast, more appropriately known as the Radeon HD 5870 X2, was shown in a meeting room running Crytek’s latest CryEngine 3 development build at very smooth framerates. More specifically, the engine was running at 1920x1200 with AA turned off (as CryEngine has never needed manual AA), and the Screen Space Ambient Occlusion (SSAO) rendering technique was significantly more pronounced than in Crysis and Crysis Warhead.

According to several AIB partner sources, the Radeon HD 5870 X2 is expected to launch at less than $500. We are somewhat skeptical about this pricing strategy because it would assume that AMD would be selling two 40nm chips from TSMC at a significant marginal loss. In comparison, the HD 4870 launched last summer at $299 while the HD 4870 X2 launched at $549. If AMD is going to make any profit, we presume that this card should sell for at least $599. As the release date approaches, we will be able to confirm pricing details on both of the Hemlock cards including the HD 5850 X2.

More pictures of the giant 12+ inch card can be found here.

kemical

Essential Member

- Joined

- Aug 28, 2007

- Messages

- 36,176

- Thread Author

- #112

GT300 is codenamed Fermi

Written by Fuad Abazovic

Monday, 28 September 2009 09:46

Named after a nuclear reactor

The chip that we ended up calling GT300 has the internal codename Fermi. The name might suit it well, as Enrico Fermi was the chap who came up with the first nuclear reactor.

The new Nvidia chip is taped out and running, and we know that Nvidia showed it to some important people. The chip should be ready for a very late 2009 launch. This GPU will also heavily concentrate on parallel computing and it will have bit elements on chip adjusted for this task. Nvidia plans to earn a lot of money because of that.

The chip supports GDDR5 memory, has billions of transistors, and should be bigger and faster than the Radeon HD 5870. The GX2 dual version of the card is also in the pipeline. It's well worth noting that this is the biggest architectural change since G80 times, as Nvidia wanted to add a lot of instructions for better parallel computing.

The gaming part is also going to be fast, but we won’t know who will end up faster in DirectX 11 games until we see the games. The clocks will be similar or very close to the ones we've seen on ATI's DirectX 11 card for both GPU and memory, but we still don't know enough about shader count and internal structure to draw any performance conclusions.

Of course, the chip supports DirectX 11 and OpenGL 3.1.

kemical

Essential Member

- Joined

- Aug 28, 2007

- Messages

- 36,176

- Thread Author

- #113

With a relatively successful launch of the HD 5870 under their belt (unless you take into account availability), ATI is gearing up for act two of the play.

The ATI Radeon HD 5870 x2 is supposedly due out sometime in October, and with the single-GPU 5870 performing so well, one can only speculate about the power the dual-GPU version might wield. The 5870 x2 is on track to become the best-performing card on the market if it scales properly; however, one of the drawbacks of the card may be its sheer size.

The main article can be found here:

Link Removed due to 404 Error

Many piccies...

kemical

Essential Member

- Joined

- Aug 28, 2007

- Messages

- 36,176

- Thread Author

- #115

Very nice post, kemical, do you plan to upgrade to 5870 ?

Depends on prices, but yes, I do plan on going DX11 at some point. I bought a DX10 card as soon as they were released and felt like I got burned a little. So this time I'll wait for the prices to drop, although I think the DX11 rollout will be a little more successful than DX10 was. Besides, we don't have to contend with a half-built OS for one thing..

cybercore

New Member

- Joined

- Jul 7, 2009

- Messages

- 15,641

DirectX 11: More Notable Than DirectX 10? - Review Tom's Hardware : ATI Radeon HD 5870: DirectX 11, Eyefinity, And Serious Speed

Direct3D 11 features:

- Tessellation: increases the number of visible polygons at runtime from a low-detail polygonal model
- Multithreaded rendering: allows rendering to the same Direct3D device object from different threads on multi-core CPUs
- Compute shaders: expose the shader pipeline for non-graphical tasks such as stream processing and physics acceleration, similar in spirit to what OpenCL, NVIDIA CUDA, and ATI Stream achieve
- HLSL Shader Model 5, among others

Other notable features are the addition of two new texture compression algorithms for more efficient packing of high-quality and HDR/alpha textures, and an increased texture cache.

The Direct3D 11 runtime will be able to run on Direct3D 9 and 10.x-class hardware and drivers, using the D3D10_FEATURE_LEVEL1 functionality first introduced in the Direct3D 10.1 runtime. This will allow developers to unify the rendering pipeline and make use of API improvements such as better resource management and multithreading even on entry-level cards.

Microsoft Direct3D - Wikipedia, the free encyclopedia
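The feature-level idea in the quoted text can be modelled in a few lines. This is only a Python sketch of the selection logic, not the real API (in native code the ordered list of levels is passed to `D3D11CreateDevice`); the function name `pick_feature_level` and the example hardware sets are hypothetical.

```python
from typing import Iterable, Optional

# Feature levels an application is willing to run on, highest first.
# The strings mirror the D3D_FEATURE_LEVEL_* names (11_0, 10_1, ...).
REQUESTED_LEVELS = ["11_0", "10_1", "10_0", "9_3", "9_2", "9_1"]


def pick_feature_level(requested: Iterable[str], supported: Iterable[str]) -> Optional[str]:
    """Return the first requested level the hardware supports, or None."""
    supported_set = set(supported)
    for level in requested:
        if level in supported_set:
            return level
    return None


# Hypothetical hardware capability sets, for illustration only.
dx11_card = {"11_0", "10_1", "10_0", "9_3", "9_2", "9_1"}
dx10_card = {"10_0", "9_3", "9_2", "9_1"}

print(pick_feature_level(REQUESTED_LEVELS, dx11_card))  # 11_0
print(pick_feature_level(REQUESTED_LEVELS, dx10_card))  # 10_0
```

The point is that one code path serves both cards: the application asks for the best level it can use, and older hardware falls back to a lower level while still benefiting from the newer runtime.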

I think I should do the same. My first impression is that DX11 itself and the ATI 5870 are both worth it, but on the other hand I feel I should at least wait for NVIDIA's DX11 chips. And as you mention, a couple of good DX11 games had better come out first.

kemical

Essential Member

- Joined

- Aug 28, 2007

- Messages

- 36,176

- Thread Author

- #118

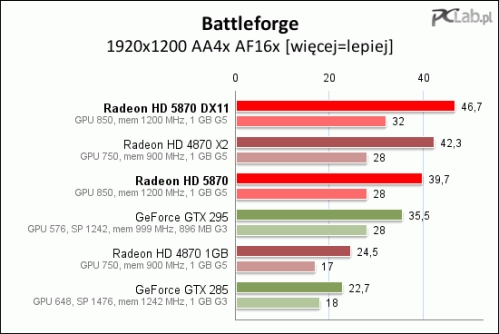

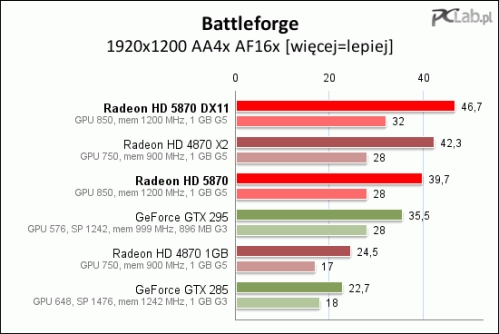

Battleforge works faster with DX 11

Monday, 28 September 2009 12:13

One down three to go

Battleforge can now be called the first DirectX 11 game: thanks to the patch that the guys from Phenomic have released and the DirectX SDK dating from August, this game runs faster on DirectX 11.

According to the graph over at Pclab.pl, the DirectX 11 patch was enough for the HD 5870 to be faster than the Radeon HD 4870 X2 at 1920x1200 with 4xAA and 16xAF. The game doesn't bring any eye-candy and you can't exactly call it a full DirectX 11 game, but at least the patch brings some gain for the HD 5800 series, as it does indeed give a few extra frames per second.

This is only the first of four games that AMD is waiting for in order to show the full potential of DirectX 11. However, Dirt 2, Alien vs. Predator and the new STALKER: Call of Pripyat game are sort of delayed: some will come by the end of this year, while the rest should be out at the beginning of 2010.

The article originally linked to the Battleforge patch details, the DirectX SDK, and the Pclab.pl graph; those links have since been removed.

So it seems that the cards will come into their own once the games are released/patched..

kemical

Essential Member

- Joined

- Aug 28, 2007

- Messages

- 36,176

- Thread Author

- #119

Fermi spec is out

Nvidia Fermi spec is out, 512 shader cores

Wednesday, 30 September 2009 17:28

3.0 billion transistors

Just hours before Nvidia decided to share the Fermi, GT300 specification with the world, we managed to get most of the spec and share it with you.

The chip has three billion transistors, a 384-bit memory interface and 512 shader cores, something that Nvidia plans to rename to CUDA cores.

The chip is made of clusters, so slower iterations with fewer shaders should not be that hard to spin off. Each cluster of the chip has 32 CUDA cores, meaning that Fermi has 16 clusters.

Another innovation is 1MB of L1 cache memory divided into 16KB Cache - Shared Memory as well as 768KB L2 of unified cache memory. This is something that many CPUs have today and as of this day Nvidia GPUs will go down that road.
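The cluster arithmetic in the article is easy to check. Below is a small Python sketch; the helper `cores_for` and the 64 KB of combined L1/shared memory per cluster are assumptions (the per-cluster figure is one reading that is consistent with the article's 1MB total, not something the article states).

```python
TOTAL_CUDA_CORES = 512   # from the article
CORES_PER_CLUSTER = 32   # from the article

# 512 cores / 32 cores per cluster = 16 clusters.
clusters = TOTAL_CUDA_CORES // CORES_PER_CLUSTER


def cores_for(active_clusters: int) -> int:
    """Core count of a hypothetical cut-down part with fewer active clusters."""
    return active_clusters * CORES_PER_CLUSTER


# Assumption: 64 KB of configurable L1 cache / shared memory per cluster.
L1_SHARED_KB_PER_CLUSTER = 64
total_l1_kb = clusters * L1_SHARED_KB_PER_CLUSTER

print(clusters)        # 16
print(cores_for(14))   # 448
print(total_l1_kb)     # 1024
```

Under that assumption, 16 clusters at 64 KB each gives 1024 KB, which matches the 1MB of L1 the article mentions. Disabling whole clusters is the obvious way to get the "slower iterations" described above, though which configurations actually ship is not known from this article.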

The chip supports GDDR5 memory and up to 6 GB of it, depending on the configuration as well as Half Speed IEEE 754 Double Precision. We also heard that the chip can execute C++ code directly and, of course, it's full DirectX 11 capable.

Nvidia’s next gen GPU is surely starting to look like a CPU. Looks like Nvidia is doing reverse Fusion and every generation they add some CPU parts on their GPU designs.
